Caching suits many different use cases, but using it well takes some forward planning. When deciding whether to cache a piece of data, consider the following questions:

Is it safe to use a cached value?

In various contexts, the same piece of data may have different consistency requirements. Caching might not be acceptable, for instance, if you need the item’s authoritative price during online checkout. However, on other pages, users won’t notice if the price is a few minutes out of date.

Is caching effective for the data?

Some applications produce access patterns that are unsuitable for caching, such as sweeping through a big dataset’s key space while it is frequently changing.

In this situation, maintaining the cache could cancel out whatever benefits it could have.

Does the data have a good caching structure?

Simply caching a database record is often enough to deliver a noticeable speed benefit. Sometimes, though, it makes more sense to cache data in a format that combines multiple records. Because caches are simple key-value stores, you might also need to cache a record in several formats so that you can retrieve it by different attributes of the record.
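
As a rough illustration of caching one record in several formats, the sketch below stores the same user under two keys so it can be found by ID or by email. The dict-based cache, the key naming scheme, and the function names are all assumptions made for this example, not part of any particular cache engine.

```python
import json
from typing import Optional

# Hypothetical in-memory stand-in for a key-value cache such as Redis or Memcached.
cache = {}

def cache_user(user: dict) -> None:
    """Store one user record under two keys so it can be looked up either way."""
    payload = json.dumps(user)
    cache[f"user:id:{user['id']}"] = payload
    cache[f"user:email:{user['email']}"] = payload

def get_user_by_email(email: str) -> Optional[dict]:
    """Return the cached user for this email, or None if it is not cached."""
    raw = cache.get(f"user:email:{email}")
    return json.loads(raw) if raw is not None else None

cache_user({"id": 42, "email": "ada@example.com", "name": "Ada"})
```

The trade-off is that every key holding a copy of the record has to be updated or deleted when the record changes.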

You don’t have to make all of these decisions up front. As you expand your use of caching, keep these guidelines in mind when deciding whether to cache a given piece of data.

Caching design patterns

Lazy caching

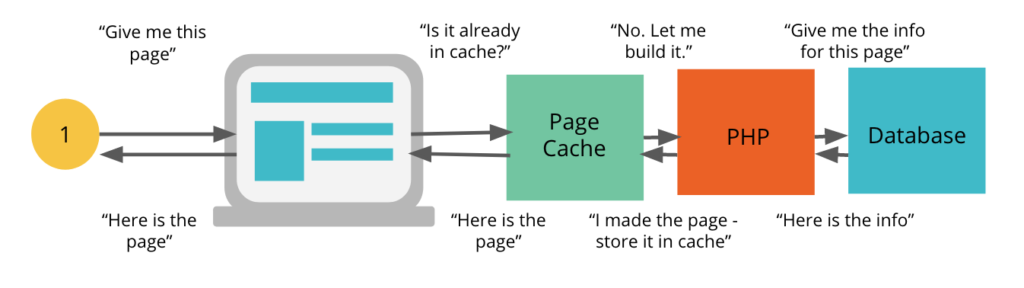

The most common form of caching is lazy caching, also called cache-aside. Laziness should be the foundation of any good caching strategy. The basic idea is to populate the cache only when the application actually requests an object. The overall application flow is as follows:

- A request for data, such as the top 10 most recent news stories, is sent to your app.

- Your app checks the cache to see whether the object is there.

- If so (a cache hit), the cached object is returned and the call flow ends.

- If not (a cache miss), then the database is searched for the object. The object is returned after the cache has been filled.
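
The flow above can be sketched in a few lines. This is a minimal illustration under stated assumptions: a plain dict stands in for the cache, `query_top_stories_from_db` is a hypothetical placeholder for a real database query, and the key name `news:top10` is invented for the example.

```python
from typing import List

# Hypothetical stand-ins: a dict plays the cache, a function plays the database.
cache = {}
db_calls = 0

def query_top_stories_from_db() -> List[str]:
    """Pretend database query; counts calls so the effect of the cache is visible."""
    global db_calls
    db_calls += 1
    return [f"story-{i}" for i in range(1, 11)]

def get_top_stories() -> List[str]:
    key = "news:top10"
    stories = cache.get(key)
    if stories is not None:                # cache hit: return immediately
        return stories
    stories = query_top_stories_from_db()  # cache miss: go to the database
    cache[key] = stories                   # fill the cache, then return
    return stories

first = get_top_stories()   # miss, reads the database
second = get_top_stories()  # hit, served from the cache
```

After both calls, the database has been queried only once; the second request never leaves the cache.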

Compared to other approaches, this one has a number of benefits:

- The cache contains only objects that the application actually requests, which helps keep the cache size manageable. New objects are added only as needed. When the cache fills up, you can manage its memory passively by letting the engine you’re using evict the least-accessed keys.

- As new cache nodes come online, for example as your application scales up, the lazy caching approach automatically adds objects to them the first time the application requests those objects.

- Cache expiration is handled simply by deleting the cached object. The next time the object is requested, a fresh copy is retrieved from the database.

- Lazy caching is widely understood, and many web and app frameworks support it out of the box.

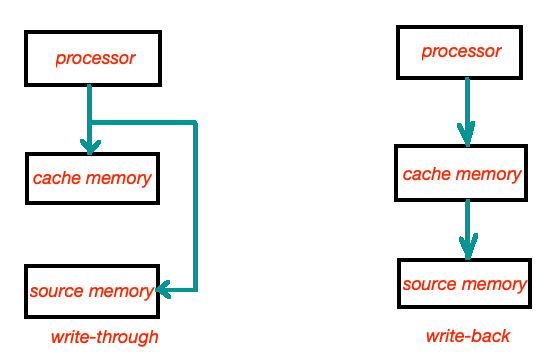

Write-through

In a write-through cache, the cache is updated in real time whenever the database is updated. For example, when a user updates their profile, the updated profile is also pushed into the cache. You can think of this as being proactive to avoid unnecessary cache misses for data that you are certain will be read. A good example is any kind of aggregate, such as a list of the top 100 video game players, the ten most-read news stories, or even recommendations. Because this data is typically updated by a specific piece of application or background job code, updating the cache as well is straightforward.
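
A write-through update might look something like the following sketch. Two dicts stand in for the database and the cache, and `save_profile`, `get_profile`, and the key format are hypothetical names chosen for illustration.

```python
# Hypothetical stand-ins: dicts play the database and the cache.
database = {}
cache = {}

def save_profile(user_id: int, profile: dict) -> None:
    """Write-through: update the database, then the cache, in the same code path."""
    database[user_id] = dict(profile)                 # authoritative write first
    cache[f"user:profile:{user_id}"] = dict(profile)  # then push the new value to the cache

def get_profile(user_id: int):
    """Reads are served from the cache when the key is present."""
    return cache.get(f"user:profile:{user_id}")

save_profile(7, {"name": "Ada", "city": "London"})
save_profile(7, {"name": "Ada", "city": "Paris"})
```

Because every write refreshes the cache, a read that follows an update sees the new profile without a cache miss.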

This strategy has some benefits over lazy caching:

- Because it avoids cache misses, the application performs better and feels snappier.

- Any application delay shifts to the moment the user updates data, which maps better to user expectations. By contrast, a series of cache misses can give users the impression that your app is simply slow.

- It simplifies cache expiration, because the cache is always up to date.

Write-through caching, however, also has a few drawbacks:

- The cache can fill up with unnecessary objects that are never actually read. These objects consume extra memory and can push more useful items out of the cache.

- If certain records are updated repeatedly, it can cause a lot of cache churn.

- When cache nodes fail, those objects are no longer in the cache. You need some way, such as lazy caching, to repopulate the missing objects.

As might be evident, you can combine lazy caching with write-through caching to help address these problems, because the two are associated with opposite sides of the data flow.

The two strategies work well together because write-through caching populates data on writes while lazy caching catches cache misses on reads. This is why it’s frequently preferable to think about lazy caching as a framework that you can use throughout your project and write-through caching as a focused improvement that you utilize in particular circumstances.

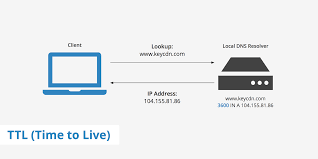

Time-to-live (TTL)

Cache expiration can quickly become very complicated. We were only using one user record in our earlier samples. In a real app, a given page or screen frequently caches a ton of different information simultaneously, including user profiles, the hottest news headlines, suggestions, user comments, and more, all of which are continually updated using various techniques.

Cache expiration is a whole branch of computer science, and there is no silver bullet for it. However, there are a few simple strategies you can use:

- Always apply a time to live (TTL) to all of your cache keys, except those you are updating through write-through caching. The TTL can be long, such as several hours or even days. This approach catches application bugs in which you forget to update or delete a given cache key when changing the underlying record. Eventually, the cache key expires and is refreshed.

- For rapidly changing data such as comments, leaderboards, or activity streams, set a short TTL of a few seconds rather than adding write-through caching or complex expiration logic. If a database query is getting hammered in production, wrapping it in a cache key with a 5-second TTL takes only a few lines of code. This can be a great Band-Aid that keeps your application up and running while you evaluate more elegant solutions.

- Russian doll caching is a newer pattern that came out of work done by the Ruby on Rails team. In this pattern, nested records are managed with their own cache keys, and the top-level resource is a collection of those cache keys. Say you run a news website with users, stories, and comments. In this approach, each of those has its own cache key, and the page queries each of those keys separately.

- When in doubt about whether a database update affects a particular cache key, simply delete the key. Your lazy caching foundation will refresh the key when it is needed. In the meantime, your database will be no worse off than it was without caching.

Evictions

When the cache’s memory is overfilled or exceeds its maximum memory setting, the engine chooses which keys to evict in order to manage its memory. Which keys are chosen depends on the eviction policy that is selected.

By default, Amazon ElastiCache for Redis sets the volatile-lru eviction policy for your Redis cluster. This policy selects the least recently used keys among those that have an expiration (TTL) value set. Other eviction policies are available and can be applied through the configurable maxmemory-policy parameter. The following is a brief summary of the eviction policies:

- allkeys-lfu: The cache evicts the least frequently used (LFU) keys, regardless of whether a TTL is set.

- allkeys-lru: The cache evicts the least recently used (LRU) keys, regardless of whether a TTL is set.

- volatile-lfu: The cache evicts the least frequently used (LFU) keys from those that have a TTL set.

- volatile-lru: The cache evicts the least recently used (LRU) keys from those that have a TTL set.

- volatile-ttl: The cache evicts the keys with the shortest TTL set.

- volatile-random: The cache randomly evicts keys that have a TTL set.

- allkeys-random: The cache randomly evicts keys, regardless of whether a TTL is set.

- noeviction: The cache doesn’t evict keys at all. This blocks future writes until memory frees up.

A good strategy for selecting an appropriate eviction policy is to consider the data stored in your cluster and the consequences of keys being evicted.

LRU-based policies are the most common for basic caching use cases. Depending on your objectives, however, a TTL-based or random eviction policy might better suit your requirements.
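
To show what LRU eviction means in practice, the sketch below implements a tiny cache that drops the least recently used key once it holds too many items. It is an illustration only: the `LRUCache` class is hypothetical, it limits by item count rather than memory, and it does not reproduce how any particular cache engine implements its policies.

```python
from collections import OrderedDict

class LRUCache:
    """Tiny LRU cache: evicts the least recently used key once max_items is exceeded."""

    def __init__(self, max_items: int) -> None:
        self.max_items = max_items
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)         # mark as most recently used
        return self._items[key]

    def set(self, key, value) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.max_items:
            self._items.popitem(last=False)  # drop the least recently used key

    def keys(self):
        """Keys ordered from least to most recently used."""
        return list(self._items)

lru = LRUCache(max_items=2)
lru.set("a", 1)
lru.set("b", 2)
lru.get("a")     # "a" is now more recently used than "b"
lru.set("c", 3)  # over capacity: "b" is evicted
```

Reading "a" before adding "c" is what saves it: the key that was touched least recently, "b", is the one that gets evicted.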

Also, if your cluster is experiencing evictions, it is usually a sign that you need to scale up (use a node with a larger memory footprint) or scale out (add more nodes to the cluster) to accommodate the additional data. The exception to this rule is when you are purposefully relying on the cache engine to manage your keys through eviction, an approach also referred to as an LRU cache.

Conclusion

Finally, it may seem as if you should cache only your expensive calculations and heavily hit database queries, and that other parts of your app would not benefit from caching. In practice, in-memory caching is widely useful, because even the most highly optimized database query or remote API call takes noticeably longer to complete than retrieving a flat cache key from memory. Just keep in mind that cached data is stale data by definition, so there may be cases where it is not appropriate to use it, such as looking up an item’s price during online checkout. To determine whether your cache is effective, you can monitor statistics such as cache misses.
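
One simple way to turn hit and miss counts into a single number is a hit ratio. The helper below is a hypothetical sketch, and the sample counter values are invented; the field names mirror the `keyspace_hits` and `keyspace_misses` counters that Redis reports in its INFO stats output.

```python
def cache_hit_ratio(hits: int, misses: int) -> float:
    """Fraction of lookups served from the cache; 0.0 when there were no lookups."""
    total = hits + misses
    return hits / total if total else 0.0

# Hypothetical counter values for illustration only.
stats = {"keyspace_hits": 900, "keyspace_misses": 100}
ratio = cache_hit_ratio(stats["keyspace_hits"], stats["keyspace_misses"])
```

A ratio that stays low or keeps falling suggests that the cached keys are rarely reused or are being evicted or expired before they can be read again.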